In a digital landscape that prizes immediacy and intimacy, the arrival of Talk Dirty AI-style systems has accelerated a cultural and commercial shift. Entrepreneurs and product teams are adapting to a world where conversational agents can simulate flirtation, erotic storytelling, and personalized companionship while navigating regulatory red lines. This piece follows Maya, founder of a small startup called Luna Labs, as she explores product-market fit for an adult-oriented conversational feature set. Along the way we examine technical architectures, moderation pipelines, SEO and discoverability strategies, legal exposures, and monetization models that matter to founders in 2025. Expect concrete examples, checklists, and a realistic assessment of risks alongside growth tactics. Practical references include studies on zero-click search, evolving search engine updates, and knowledge-management integrations that alter how users find and interact with explicit conversational services. For any entrepreneur balancing trust, safety, and growth, the stakes are high: reputation and revenue hinge on design choices that respect users and regulators while delivering the intimacy modern audiences seek.

How Talk Dirty AI is reshaping online conversations and moderation in 2025

Maya launched Luna Labs to test a suite of features that leaned into sensual, AI-driven interactions. She quickly noticed two realities: users wanted far more personalization than canned replies could offer, and platforms responded with stricter moderation. The tension between personalization and safety defines the current market.

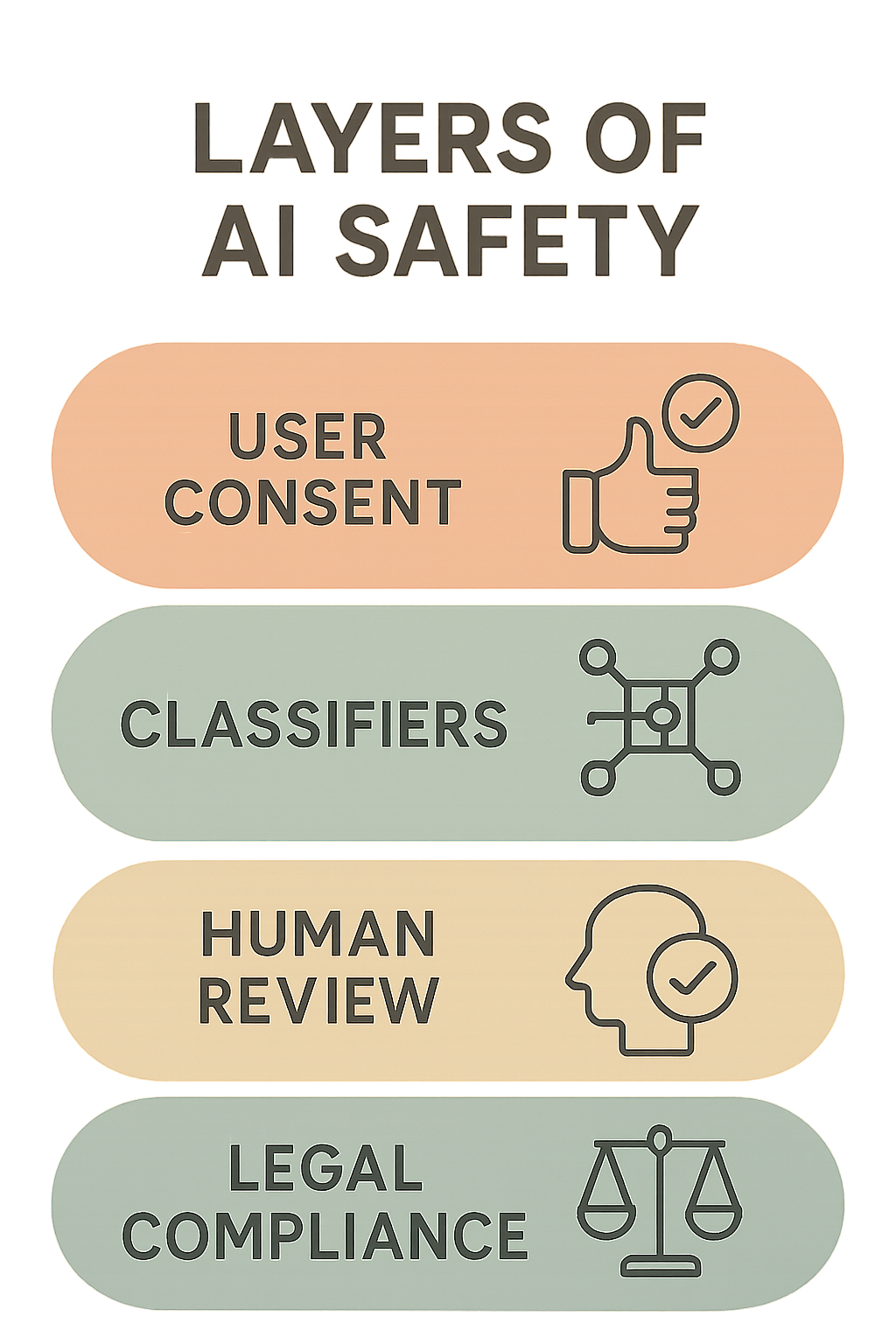

At a technical level, modern systems blend large language models with specialized safety layers. Core models, whether accessed from providers such as OpenAI or built on architectures inspired by DeepMind research, can generate rich, contextual responses. Teams overlay classifiers, intent detectors, and content filters to manage explicit content and legal exposure.

Key components of contemporary explicit chat stacks

Successful stacks typically include:

- Preprocessing: user intent extraction and age gating.

- Core generation: a language model tuned for style while constrained by policy.

- Safety filters: multi-layer classifiers to detect sexual content, minors, and exploitative scenarios.

- Post-processing: style moderation and personalization tokens.

- Audit trails: logging for compliance and quality review.
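To make the layering concrete, here is a minimal Python sketch of how the five stages might be chained. It is an illustration under stated assumptions, not a reference implementation: the keyword set stands in for trained classifiers, the `generate` stub stands in for a model call, and every name (`ChatRequest`, `AuditLog`, `handle`, `BLOCKED_TERMS`) is hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class ChatRequest:
    user_id: str
    text: str
    age_verified: bool
    explicit_opt_in: bool


@dataclass
class AuditLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, user_id: str, stage: str, outcome: str) -> None:
        # Audit trail: one timestamped entry per pipeline decision.
        self.entries.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "stage": stage,
            "outcome": outcome,
        })


# Hypothetical placeholder for a trained safety classifier; crude substring
# matching like this produces false positives and is for illustration only.
BLOCKED_TERMS = {"underage", "non-consensual"}


def preprocess(req: ChatRequest) -> Optional[str]:
    # Preprocessing: refuse before generation if the age gate has not been passed.
    if not req.age_verified:
        return "blocked: age verification required"
    return None


def passes_safety(text: str) -> bool:
    # Safety filter: True when no blocked term appears.
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def generate(req: ChatRequest) -> str:
    # Core generation: stub standing in for a policy-constrained model call.
    mode = "explicit-allowed" if req.explicit_opt_in else "general"
    return f"[{mode}] reply to: {req.text}"


def postprocess(text: str, persona: str = "default") -> str:
    # Post-processing: attach persona styling after safety checks.
    return f"{persona}: {text}"


def handle(req: ChatRequest, log: AuditLog) -> str:
    refusal = preprocess(req)
    if refusal is not None:
        log.record(req.user_id, "preprocess", refusal)
        return refusal
    if not passes_safety(req.text):
        log.record(req.user_id, "input_filter", "blocked")
        return "blocked: policy violation"
    draft = generate(req)
    # Filter the model output too, since generation can drift past the input check.
    if not passes_safety(draft):
        log.record(req.user_id, "output_filter", "blocked")
        return "blocked: policy violation"
    log.record(req.user_id, "deliver", "ok")
    return postprocess(draft)
```

The design choice worth keeping when the stubs are replaced is that both the user input and the model draft pass through the safety filter, and every decision leaves an audit entry.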

Those pieces are necessary but not sufficient. Platform rules and third-party integrations complicate deployment. For example, voice integrations may use systems resembling WhisperBot to transcribe audio, and image or avatar features require separate moderation.

Table: comparative risk and mitigation summary for dirty-chat features

| Feature | Main Risk | Mitigation |
|---|---|---|
| Text-only erotic chat | Policy takedowns; user safety | Robust NSFW classifiers; explicit consent flows |
| Voice/ASR interactions | Age-verification gaps; leaked transcripts | ASR filters; server-side transcription audit |
| Avatar/visual flirtation | Deepfake and consent misuse | Image provenance checks; user agreements |
| Third-party publishing | Platform bans | Sandboxed product; clear terms |

Maya found that explicit labels and clear EULA clauses reduced complaints and improved retention among consenting adults. She also integrated a targeted onboarding flow explaining the product’s limits. That transparency matters: users who know boundaries tend to stay longer and spend more.

- Tip: Separate explicit flows from general chat to avoid accidental exposure.

- Tip: Keep logs and consent timestamps for legal defensibility.

- Tip: Localize moderation rules for jurisdictions with different decency standards.
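The first and third tips can be combined in a single routing check that decides whether a session may enter the explicit flow at all. The sketch below is hypothetical: the market keys and rule values are placeholders rather than real legal requirements, and any unknown market falls back to the general flow (fail closed).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LocaleRules:
    explicit_allowed: bool
    min_age: int


# Placeholder rules; real values must come from legal review per jurisdiction.
LOCALE_RULES = {
    "market_a": LocaleRules(explicit_allowed=True, min_age=18),
    "market_b": LocaleRules(explicit_allowed=True, min_age=21),
    "market_c": LocaleRules(explicit_allowed=False, min_age=18),
}
# Unknown markets get the most restrictive treatment.
DEFAULT_RULES = LocaleRules(explicit_allowed=False, min_age=18)


def choose_flow(locale: str, verified_age: Optional[int], opted_in: bool) -> str:
    rules = LOCALE_RULES.get(locale, DEFAULT_RULES)
    # Explicit flow requires local permission and the user's own opt-in.
    if not rules.explicit_allowed or not opted_in:
        return "general"
    # No verified age, or below the local minimum: stay in the general flow.
    if verified_age is None or verified_age < rules.min_age:
        return "general"
    return "explicit"
```

Keeping the rules in data rather than in branching code lets reviewers update a market's entry without touching the routing logic.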

Search behavior changed too. As search engines experiment with synthesized answers and “zero-click” results, entrepreneurs must adapt SEO to attract users who intend to interact with a product rather than merely read about it. Analyses of zero-click search help product and marketing teams refine acquisition strategies; see the research here: search without clicks study.

Regulatory frameworks, platform policies, and search algorithms all evolve rapidly, so staying informed about major changes such as Google’s core updates matters. For entrepreneurs, anticipating search and policy shifts in product design reduces the risk of sudden traffic drops. Read coverage on algorithm changes and implications here: Google core update.

Final insight: Robust stacks combine model capability with deliberate, user-centered moderation and transparency to scale erotic conversational products while minimizing platform and legal risks.

Design patterns: personalization, safety trade-offs, and business models for DirtyDialogue platforms

Designing for intimacy demands intentional trade-offs. Maya experimented with persona layers that made conversations feel intimate without crossing consent or age boundaries. This involved modular persona templates, session-limited memory, and explicit opt-in for sexual content.

Below are core design patterns that matter for products like SexyChatGPT and SpicyAI:

- Persona separation: keep persona definitions separate from safety policy, with a clear, documented mapping between each persona and the filters that apply to it, to simplify audits.

- Scoped memory: let users choose what the AI “remembers” and support periodic purges.

- Graduated explicitness: include tunable sliders so users control tone from flirtatious to explicit.

- Hygiene checks: automated checks to block underage cues or non-consensual scenarios.

- Monetization-safe paths: ensure premium features don’t violate payment processor policies.
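A minimal sketch of three of these patterns, assuming explicitness is expressed as a small integer scale (0 for flirtatious only, higher for more explicit): personas and policy are separate objects, memory stores only the keys a user has allowed and can be purged, and the effective level is the minimum of the persona's request, the user's slider, and the policy ceiling. All class and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class Policy:
    # Policy is defined apart from personas so it can be audited on its own.
    max_explicitness: int  # hypothetical scale: 0 = flirtatious only


@dataclass(frozen=True)
class Persona:
    name: str
    tone: str
    requested_explicitness: int


@dataclass
class ScopedMemory:
    allowed_keys: Set[str] = field(default_factory=set)
    facts: Dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> bool:
        # Store only the categories the user has opted to let the AI remember.
        if key not in self.allowed_keys:
            return False
        self.facts[key] = value
        return True

    def purge(self) -> None:
        # Periodic or user-triggered purge of everything remembered.
        self.facts.clear()


def effective_explicitness(persona: Persona, user_slider: int,
                           policy: Policy, opted_in: bool) -> int:
    # Without explicit opt-in, stay at the lowest level.
    if not opted_in:
        return 0
    # Neither the persona nor the user can exceed the policy ceiling.
    return max(0, min(persona.requested_explicitness, user_slider,
                      policy.max_explicitness))
```

Because the ceiling lives only in `Policy`, an auditor can confirm the maximum explicitness for a deployment without reading any persona template.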

Monetization and platform constraints

Choosing a revenue model must account for policy friction. Subscription, pay-per-session, and microtransactions are common, but payment partners often restrict explicit services. Maya avoided direct billing for explicit content by offering “mood modules” as benign-sounding upgrades and running explicit content behind authenticated, age-verified gateways.

Examples of practical approaches:

- Freemium entry with explicit flows gated behind age verification and separate billing partners.

- Token-based sessions where tokens are marketed generically (e.g., “premium tokens”) and explicit content is consumed within the gated environment.

- Affiliate partnerships with adult platforms that have mature-payment stacks to avoid processor rejections.

Policy and compliance require layers of automation plus human review. Systems using classifiers trained on curated datasets can catch many violations, but human moderators are still necessary for edge cases. Luna Labs created a “tiered review” model: automated rejections for clear violations, semi-automated queues for borderline cases, and human review for appeals.
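The tiered model reduces to a routing function over a classifier's violation score. In this sketch the thresholds (`REJECT_AT`, `BORDERLINE_AT`) are hypothetical values that would need tuning against labelled data, and appeals always go to a person regardless of score.

```python
from enum import Enum


class Route(Enum):
    AUTO_REJECT = "auto_reject"      # clear violations
    BORDERLINE_QUEUE = "borderline"  # semi-automated queue
    HUMAN_REVIEW = "human_review"    # appeals
    ALLOW = "allow"


# Hypothetical thresholds on a 0..1 violation score.
REJECT_AT = 0.9
BORDERLINE_AT = 0.5


def route(violation_score: float, is_appeal: bool = False) -> Route:
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be in [0, 1]")
    # Appeals bypass automation entirely.
    if is_appeal:
        return Route.HUMAN_REVIEW
    if violation_score >= REJECT_AT:
        return Route.AUTO_REJECT
    if violation_score >= BORDERLINE_AT:
        return Route.BORDERLINE_QUEUE
    return Route.ALLOW
```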

Operational checklist for builders

- Implement multi-factor age verification and store hashed consent stamps.

- Establish rate limits and session caps to deter grooming or abusive behavior.

- Create a transparent reporting and appeals process visible to users.

- Document content policies and publish community guidelines to set expectations.

- Maintain a whitelist/blacklist of sensitive phrases adapted per locale.
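Two checklist items lend themselves to a short sketch. The consent stamp below uses HMAC-SHA256 from Python's standard `hmac` module so a stored record can later be checked for tampering, and it keeps a keyed hash of the user ID rather than the raw ID. The limiter combines a sliding-window message rate with a hard per-session cap. `SECRET_KEY`, the record fields, and the limits are assumptions; a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac
from collections import deque
from datetime import datetime, timezone

SECRET_KEY = b"replace-with-managed-secret"  # hypothetical; never hard-code in production


def _mac(message: str) -> str:
    return hmac.new(SECRET_KEY, message.encode(), hashlib.sha256).hexdigest()


def consent_stamp(user_id: str, consent_version: str, when: datetime) -> dict:
    # Normalize to UTC so stamps compare consistently across regions.
    ts = when.astimezone(timezone.utc).isoformat()
    return {
        "user_hash": _mac(user_id),  # keyed hash instead of the raw ID
        "version": consent_version,
        "ts": ts,
        "mac": _mac(f"{user_id}|{consent_version}|{ts}"),
    }


def verify_stamp(stamp: dict, user_id: str) -> bool:
    # Recompute the MAC and compare in constant time.
    expected = _mac(f"{user_id}|{stamp['version']}|{stamp['ts']}")
    return hmac.compare_digest(expected, stamp["mac"])


class SessionLimiter:
    """Sliding-window rate limit plus a hard cap on messages per session."""

    def __init__(self, max_messages: int, window_seconds: float, session_cap: int):
        self.max_messages = max_messages
        self.window = window_seconds
        self.session_cap = session_cap
        self.recent: deque = deque()
        self.total = 0

    def allow(self, now: float) -> bool:
        # Drop timestamps that have left the window.
        while self.recent and now - self.recent[0] >= self.window:
            self.recent.popleft()
        if self.total >= self.session_cap or len(self.recent) >= self.max_messages:
            return False
        self.recent.append(now)
        self.total += 1
        return True
```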

From an engineering standpoint, model fine-tuning for erotic tone involves curated prompts and constrained decoding. Techniques like reinforcement learning from human feedback (RLHF) can align outputs with allowed behaviors. However, the more tuned a model is to sexual content, the higher the risk of policy conflict with providers or hosting platforms. That’s where white-label or self-hosted deployments come in handy, but they add cost and complexity.
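Constrained decoding can take several forms; one of the simplest is logit masking, where disallowed tokens get a score of negative infinity before sampling so they can never be chosen. The sketch uses a toy word-level vocabulary for readability; a real model exposes logits over subword token IDs, and the function names here are hypothetical.

```python
import math
import random
from typing import Dict, Set


def mask_logits(logits: Dict[str, float], banned: Set[str]) -> Dict[str, float]:
    # Banned tokens get -inf so their probability becomes exactly zero.
    return {tok: (-math.inf if tok in banned else score) for tok, score in logits.items()}


def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    finite = [v for v in logits.values() if v != -math.inf]
    if not finite:
        raise ValueError("every token is masked")
    peak = max(finite)  # subtract the max for numerical stability
    exps = {t: (0.0 if v == -math.inf else math.exp(v - peak)) for t, v in logits.items()}
    total = sum(exps.values())
    return {t: e / total for t, e in exps.items()}


def sample_constrained(logits: Dict[str, float], banned: Set[str],
                       rng: random.Random) -> str:
    probs = softmax(mask_logits(logits, banned))
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]
```

Masking complements the downstream safety filters rather than replacing them, since problematic content can still be assembled from individually allowed tokens.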

Maya also watched search trends for discovery signals that favored immersive experiences. The shift among younger cohorts from search engines to social platforms requires cross-channel acquisition playbooks. Read about this generational shift toward social discovery here: Gen Z search habits.

Final insight: The best products separate persona from policy, fund human review, and choose monetization models that align with payment-provider constraints to sustain growth.

SEO, discoverability, and TalkTech opportunities for entrepreneurs using FlirtyTalks and SultryAI

Visibility is tricky for adult-oriented conversational products. Search engines and social apps often apply additional scrutiny to explicit content, and the emergence of AI-generated summaries in SERPs has changed click behavior. Entrepreneurs must craft a discoverability strategy that respects platform rules and leverages emerging tools.

Three levers delivered results for Luna Labs as it tested niche acquisition strategies:

- Content differentiation: publish editorial pieces that address intimacy technology without explicit examples, driving safe organic traffic.

- Search intent mapping: prioritize queries with transactional intent where users seek products, not purely erotic content.

- Platform partnerships: collaborate with adult-friendly marketplaces or privacy-respecting platforms for referral traffic.

Technical SEO and AI-friendly patterns

Search engines increasingly reward pages that demonstrate expertise, authority, and trustworthiness. For a product like FlirtyTalks or SultryAI, that means transparent policy pages, clear age verification signals, and enterprise-grade security statements.

Key technical SEO steps:

- Structured data for product pages that emphasize safety and age gating without presenting explicit content.

- Server-side rendering for public pages to ensure crawlers index essential trust content.

- Content hubs that discuss relationship education, consent, and AI safety to earn authoritative backlinks.
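For the structured-data step, a product page can carry schema.org JSON-LD that describes the app and its adult audience without any explicit content. The sketch builds a `SoftwareApplication` object with `isFamilyFriendly` and a `PeopleAudience` whose `requiredMinAge` is 18. These are schema.org properties, but whether a given search engine uses them for ranking or display is not guaranteed. The product name, URL, description, and category value are placeholders.

```python
import json


def product_jsonld(name: str, url: str, min_age: int = 18) -> dict:
    return {
        "@context": "https://schema.org",
        "@type": "SoftwareApplication",
        "name": name,
        "url": url,
        "applicationCategory": "LifestyleApplication",  # placeholder category value
        "isFamilyFriendly": False,
        "audience": {"@type": "PeopleAudience", "requiredMinAge": min_age},
        "description": "Age-verified conversational companion with consent controls.",
    }


def render_script_tag(data: dict) -> str:
    # Escape "</" so a value can never close the <script> element early.
    payload = json.dumps(data).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```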

Search engine dynamics in 2025 also require a response to synthesized answers and knowledge panels. Products must prevent inadvertent exposure by ensuring explicit content is never accessible to crawler bots and by creating safe landing pages that funnel consenting adults through verification. See insights on AI-driven knowledge management that can reshape content discovery here: Nolej AI knowledge management.
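Keeping explicit content away from crawlers is an access-control problem, not a robots.txt problem, because robots.txt is only a request that well-behaved bots honor. A minimal sketch, assuming a hypothetical `/app/` prefix for the verified area and a hypothetical `/verify` landing page, answers every unverified request the same way whatever its User-Agent, and marks gated responses with the `X-Robots-Tag: noindex` header.

```python
from typing import Dict, Tuple

GATED_PREFIX = "/app/"   # hypothetical verified-adult area
VERIFY_PATH = "/verify"  # hypothetical safe landing page

Response = Tuple[int, Dict[str, str], str]


def respond(path: str, session_verified: bool) -> Response:
    if path.startswith(GATED_PREFIX):
        headers = {
            "X-Robots-Tag": "noindex, nofollow",
            "Cache-Control": "private, no-store",
        }
        # The User-Agent is deliberately ignored: bots and humans without a
        # verified session both get a redirect and an empty body.
        if not session_verified:
            headers["Location"] = VERIFY_PATH
            return 302, headers, ""
        return 200, headers, "<gated content>"
    # Public pages carry only safe, indexable trust content.
    return 200, {"Content-Type": "text/html"}, "<public landing page>"
```

Also blocking `/app/` in robots.txt would stop compliant crawlers from fetching those URLs at all, which means they would never see the `noindex` header; the authentication check and the header do the real work here.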

Toolbox: interactive risk-and-traffic simulator for adult chat features

The simulator takes monthly traffic and behavioral rates as inputs and estimates signups, moderation load, revenue, and takedown risk.

Use cases for the simulator:

- Estimate moderation FTE needs based on projected traffic.

- Test acquisition scenarios: organic vs. paid vs. referral.

- Simulate revenue under subscription and token models while factoring in churn from incidents.
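The interactive tool itself is not reproduced here, but the arithmetic such a simulator performs can be sketched as a funnel calculation. Every input name and the model structure are assumptions. Takedown risk is approximated as the probability of at least one severe incident in a month, treating sessions as independent, which is a simplification.

```python
import math
from dataclasses import dataclass


@dataclass
class SimInputs:
    monthly_visitors: int
    signup_rate: float             # share of visitors who register
    verification_pass_rate: float  # share of signups passing age verification
    paid_conversion: float         # share of verified users who pay
    arpu_paid: float               # monthly revenue per paying user
    sessions_per_user: float       # monthly sessions per verified user
    flag_rate: float               # share of sessions flagged by classifiers
    human_review_share: float      # share of flags needing a human decision
    reviews_per_fte: int           # human reviews one moderator clears per month
    severe_incident_rate: float    # per-session probability of a severe incident


def simulate(x: SimInputs) -> dict:
    signups = x.monthly_visitors * x.signup_rate
    verified = signups * x.verification_pass_rate
    payers = verified * x.paid_conversion
    sessions = verified * x.sessions_per_user
    flags = sessions * x.flag_rate
    human_reviews = flags * x.human_review_share
    return {
        "signups": signups,
        "verified_users": verified,
        "payers": payers,
        "monthly_revenue": payers * x.arpu_paid,
        "sessions": sessions,
        "flags": flags,
        "human_reviews": human_reviews,
        # Round up to whole moderators (a simplifying assumption).
        "moderator_fte": math.ceil(human_reviews / x.reviews_per_fte),
        # Chance of at least one severe incident, assuming independent sessions.
        "p_severe_incident": 1 - (1 - x.severe_incident_rate) ** sessions,
    }
```

For example, 100,000 monthly visitors with a 5% signup rate, an 80% verification pass rate, 10% paid conversion, and $15 per paying user yields about 400 payers and $6,000 a month. At 10 sessions per verified user, a 2% flag rate, and a quarter of flags needing a human, that is 200 reviews, or one moderator at 1,500 reviews a month. A severe-incident rate of 1 in 100,000 sessions already implies roughly a one-in-three chance of at least one incident per month.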

Aside from organic search, social channels can drive high-intent traffic when approached correctly. That means content marketing that frames your product in relationship, therapy-adjacent, or technological terms rather than pure erotica. For a primer on local and multi-location optimization that informs regional strategies, read: multi-location SEO guide.

Finally, product teams should monitor broad search engine updates. Major algorithm shifts can rapidly affect discoverability. Coverage of Google’s June update provides practical lessons in volatility management: Google June 2025 update.

Final insight: Combine safe public content with gated, compliant experiences and use simulation tools to size operations and forecast ROI while staying agile to search algorithm changes.

Ethics, legal exposure and moderation strategies: balancing NSFWPrompt consumer demand with compliance

Ethical design is not optional for explicit conversational services. Luna Labs assigned a legal-and-ethics lead to map obligations across jurisdictions and to design a proportional moderation approach. This section covers the legal landscape, ethical design heuristics, and pragmatic moderation workflows.

Legal exposure is multi-dimensional: data protection laws, pornography statutes, age verification rules, and platform-specific policies. Entrepreneurs must build compliance checklists per market. For example, the EU applies stricter data-processing (notably under the GDPR) and child-protection standards than many other regions.

Practical moderation architecture

Layered systems are the most practical. Maya implemented a triage pipeline:

- Automated classifiers flag clear violations (sexual content involving minors, explicit violence, or non-consensual cues).

- Context-aware heuristics use conversational history to reduce false positives.

- Human review for appeals and low-confidence cases.
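The context-aware step can be as simple as smoothing per-turn classifier scores over the conversation, so a single ambiguous turn in an otherwise benign session goes to a person instead of triggering an automatic block, while sustained signals still escalate. The sketch uses an exponentially weighted moving average; the thresholds, `alpha`, and function names are hypothetical, and hard violations (such as anything involving minors) bypass smoothing entirely.

```python
from typing import List

HIGH = 0.8  # hypothetical thresholds on a 0..1 score
LOW = 0.5


def ewma(scores: List[float], alpha: float) -> float:
    # Exponentially weighted moving average: recent turns weigh more.
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if not scores:
        raise ValueError("scores must not be empty")
    smoothed = scores[0]
    for score in scores[1:]:
        smoothed = alpha * score + (1 - alpha) * smoothed
    return smoothed


def triage(turn_score: float, history: List[float],
           hard_violation: bool, alpha: float = 0.5) -> str:
    # Stage 1: clear violations are blocked without waiting for context.
    if hard_violation:
        return "block"
    # Stage 2: weigh the current turn against the conversation so far.
    context_score = ewma(history + [turn_score], alpha)
    if context_score >= HIGH:
        return "block"
    # Stage 3: low-confidence cases, including a lone high-scoring turn, go to a person.
    if context_score >= LOW or turn_score >= HIGH:
        return "human_review"
    return "allow"
```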

Moderator wellbeing is also an ethical concern. Luna Labs limited shift lengths, anonymized the user data moderators were exposed to, and provided counseling benefits. These operational choices improved moderator retention.

Handling NSFWPrompt vectors and abuse

Users often attempt to bypass filters using obfuscation or roleplay. Defenses include phrase normalization, semantic detection, and adversarial testing wherein QA teams try to break the system. Automated systems should be retrained periodically using real-world attempts to ensure robustness.
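Phrase normalization is the cheapest of these defenses. A sketch using only the standard library applies Unicode NFKC folding (which maps, for example, full-width letters to their ASCII forms), removes zero-width characters, case-folds, applies a small leetspeak map, and collapses letters separated by dots or spaces. The character maps and the placeholder blocked term are assumptions; a real list would be locale-specific and maintained alongside semantic detectors.

```python
import re
import unicodedata
from typing import Iterable

# Zero-width characters often inserted to split words (mapped to None = deleted).
ZERO_WIDTH = dict.fromkeys(map(ord, "\u200b\u200c\u200d\u2060\ufeff"))
# Small, hypothetical leetspeak map; real lists are locale-specific.
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a",
                      "5": "s", "7": "t", "@": "a", "$": "s"})
# Three or more single characters separated by dots, dashes, underscores,
# asterisks, or whitespace, e.g. "b.a.d" or "b a d".
SPACED = re.compile(r"\b(?:\w[.\-_*\s]){2,}\w\b")
SEPARATORS = re.compile(r"[.\-_*\s]")


def normalize(text: str) -> str:
    text = unicodedata.normalize("NFKC", text)  # full-width forms -> ASCII, etc.
    text = text.translate(ZERO_WIDTH)
    text = text.casefold()
    text = text.translate(LEET)
    # Rejoin letter-by-letter spellings without merging ordinary words.
    return SPACED.sub(lambda m: SEPARATORS.sub("", m.group(0)), text)


def contains_blocked(text: str, blocked: Iterable[str]) -> bool:
    normalized = normalize(text)
    return any(normalize(term) in normalized for term in blocked)
```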

Case example: a user tried to coax sexual content involving a minor through indirect phrasing. The pipeline flagged contextual red flags and escalated the session to human review, which resulted in a permanent ban. The incident was then added to the training data, improving the classifier’s sensitivity.

- Keep an evolving list of obfuscation patterns and slang per locale.

- Use counterfactual testing to validate filters against new prompts.

- Apply stricter thresholds on edge-case content and escalate quickly.
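Counterfactual testing can start as a harness that rewrites known-bad seed phrases in the ways users commonly obfuscate them and reports every variant the filter lets through. The sketch is hypothetical throughout: the variant generators cover only a handful of tricks, and `naive_filter` is a deliberately weak filter so the harness has something to find.

```python
from typing import Callable, Iterable, List


def variants(phrase: str) -> List[str]:
    leet = phrase.translate(str.maketrans({"o": "0", "i": "1", "e": "3", "a": "4"}))
    # Printable ASCII maps to the full-width block by adding 0xFEE0.
    fullwidth = "".join(chr(ord(c) + 0xFEE0) if "!" <= c <= "~" else c for c in phrase)
    return [
        phrase,                 # control: must always be caught
        phrase.upper(),         # case change
        " ".join(phrase),       # letter spacing
        ".".join(phrase),       # dotted letters
        "\u200b".join(phrase),  # zero-width spaces
        leet,                   # leetspeak digits
        fullwidth,              # full-width Unicode forms
    ]


def counterfactual_misses(is_blocked: Callable[[str], bool],
                          seeds: Iterable[str]) -> List[str]:
    # Every variant the filter fails to block is a regression to fix.
    return [v for seed in seeds for v in variants(seed) if not is_blocked(v)]


def naive_filter(text: str) -> bool:
    # Deliberately weak: plain lowercase substring match on a placeholder term.
    return "forbidden" in text.lower()
```

Running the same harness after every filter change turns the evolving obfuscation list into a regression suite.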

There are reputational risks beyond legal ones. Public exposure of moderation failures can be catastrophic. That’s why transparency reports and a clear appeals process are valuable trust signals. Luna Labs published periodic summaries that explained moderation volume and outcomes without exposing sensitive user data.

Another dimension is technology provenance. Model providers and hosting platforms set different usage policies, and some explicitly ban erotic use. Projects must choose hosting partners and model providers consistent with their intended use cases. A firm that intends to run a product akin to DirtyDialogue or SultryAI may need a self-hosted stack despite its greater operational cost.

Finally, entrepreneurs should track how search engines summarize pages. Google’s increasing use of AI summaries can reduce referral traffic. Read analysis of how AI summaries impact web traffic here: Google AI summaries and traffic.

Final insight: Ethical, legal, and human-centered moderation strategies are as important as model quality for sustaining an explicit chat product in the long run.

Practical playbook: launching a compliant SexyChatGPT or SpicyAI experience that scales

Launching requires coordinated work across product, legal, engineering, and marketing. Luna Labs’ launch checklist evolved into a repeatable playbook. Below are tactical steps founders can apply today.

Pre-launch checklist

- Market validation: run anonymous concept tests to measure willingness to pay and feature preferences.

- Policy mapping: document constraints of hosting, payment, and distribution partners.

- Safety-first MVP: build an initial experience that prioritizes consent flows and visible safety controls.

- Monitoring and rollback: implement automated rollback triggers for sudden spikes in abuse reports.

- SEO and content plan: prepare safe, informative landing pages and authority-building content; consider multi-location SEO tactics as explained here: local SEO in Swiss Romande.
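For the monitoring-and-rollback item, one simple trigger compares today's abuse-report count with a recent baseline and fires when the count is both large in absolute terms and several standard deviations above normal. The z-score threshold, the minimum count, and the function name are assumptions, and the rollback action itself (for example, switching off a feature flag) is left out.

```python
from statistics import mean, stdev
from typing import Sequence


def should_roll_back(history: Sequence[int], current: int,
                     z_threshold: float = 3.0, min_reports: int = 20) -> bool:
    # Ignore small absolute counts, where ratios are noisy, and thin baselines.
    if current < min_reports or len(history) < 2:
        return False
    baseline = mean(history)
    spread = stdev(history)  # sample standard deviation; needs >= 2 points
    if spread == 0:
        # Flat baseline: any increase above it counts as a spike.
        return current > baseline
    return (current - baseline) / spread >= z_threshold
```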

Launch phase tactics:

- Start in jurisdictions with clear, well-understood rules for adult services to validate UX while limiting legal complexity.

- Use invite-only rollouts to control growth and refine moderation workflows.

- Measure quality with both quantitative metrics (churn, session length, report rate) and qualitative review (moderator annotations, user feedback).

Operations scaling:

- Automate routine moderation decisions; keep humans for nuanced cases.

- Document escalation paths and legal notices for takedown requests.

- Integrate analytics that segment high-risk sessions for preventive action.
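Segmenting sessions by risk can start with a few rules over signals the moderation pipeline already produces, paired with a quality metric such as reports per thousand sessions. The field names and cut-offs below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class SessionStats:
    session_id: str
    max_violation_score: float  # highest classifier score in the session
    reports: int                # user reports filed against the session
    flagged_turns: int          # turns flagged by automated filters


def risk_segment(s: SessionStats) -> str:
    if s.reports > 0 or s.max_violation_score >= 0.8:
        return "high"
    if s.flagged_turns >= 2 or s.max_violation_score >= 0.5:
        return "elevated"
    return "normal"


def summarize(sessions: List[SessionStats]) -> Dict[str, object]:
    segments = {"high": 0, "elevated": 0, "normal": 0}
    for s in sessions:
        segments[risk_segment(s)] += 1
    total_reports = sum(s.reports for s in sessions)
    # Report rate per 1,000 sessions; 0.0 when there is no data yet.
    rate = 1000 * total_reports / len(sessions) if sessions else 0.0
    return {"segments": segments, "reports_per_1k_sessions": rate}
```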

Maya also monitored macro shifts in platform behavior. For instance, when Google rolled out a major algorithm change, she prepared content and technical fixes in advance. Read coverage of this kind of update here: Google core update June.

Branding and naming matter. Using euphemisms can help avoid initial rejections from stores or ad networks, but authenticity sells. Names like FlirtyTalks or SexyChatGPT clearly signal purpose, but you may choose softer parent-brand positioning with explicit modules in a gated area.

Payment and monetization deserve special attention. Maya’s choice to use privacy-focused billing partners and tokenized microtransactions lowered chargeback risk. She partnered with adult-friendly affiliates rather than mainstream merchant processors for explicit purchases.

Lastly, keep an eye on adjacent tech: transcription tools and voice interfaces (think WhisperBot-style systems), embedding tools, and knowledge-management integrations can expand product scope. Tools like Nolej AI reshape how content and training data are organized; consider their impact when designing moderation and training pipelines: Nolej AI transformation.

Final insight: A launch that balances cautious compliance with strong product-market fit, supported by robust moderation and SEO playbooks, can scale sustainably even in a challenging regulatory environment.